Problem:

I have many different URLs in a database.

They come from many sites.

I don't know how these sites work or how their URLs are structured.

So I need to take 500 URLs from each site, compare them, and group them by their common static part.

The groups should be merged automatically by replacing every dynamic URL part with {var}.

That should leave about 10 URLs as the result.

End goal: reduce the database size.

Solution:

Here is a kind of proof of concept 🙂

Example that splits the URL at «?»:

- Parse the parameters.
- Count the occurrences of each unique value of every parameter.
- Get the Nth percentile.
- Build the URLs, replacing every parameter whose number of distinct values is above the Nth percentile.
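The first two steps are easier to see on a sample with more than one parameter. This is a minimal sketch, not the script from this post: `countDistinctValues` and the sample URLs are hypothetical, and it reads the query string with `parse_url` instead of splitting at «?».

```php
<?php

// Hypothetical helper: number of distinct values seen per query parameter.
function countDistinctValues(array $urls): array
{
    $seen = [];
    foreach ($urls as $url) {
        $query = parse_url($url, PHP_URL_QUERY);
        if (!is_string($query) || $query === '') {
            continue; // no query string to analyse
        }
        parse_str($query, $params);
        foreach ($params as $key => $val) {
            if (is_array($val)) {
                continue; // array-style parameters (a[]=1) are skipped in this sketch
            }
            $seen[$key][$val] = true;
        }
    }
    // array_map keeps the parameter names as keys
    return array_map('count', $seen);
}

$urls = [
    'http://example.com/?page=1&lang=en',
    'http://example.com/?page=2&lang=en',
    'http://example.com/?page=3&lang=de',
    'http://example.com/?page=4&lang=en',
];

$distinct = countDistinctValues($urls);
print_r($distinct); // page has 4 distinct values, lang has 2
```

A parameter with many distinct values, like `page` here, is a candidate for replacement. One with only a few, like `lang`, probably belongs to the static part.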

For a small data set like the one here, the 50th percentile is enough to group some URLs.

For «big real data», use the 90th to 95th percentile.
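To show how the choice of percentile changes the outcome, here is a small sketch. The distinct-value counts are made up, and `nearestRankPercentile` is a simplified nearest-rank variant used only for illustration, not the exact formula from the script in this post. It needs PHP 7.4+ for the arrow function.

```php
<?php

// Simplified nearest-rank percentile (illustrative assumption).
function nearestRankPercentile(float $percentile, array $values): float
{
    sort($values); // sorts ascending and reindexes from 0
    $rank = (int)ceil(($percentile / 100) * count($values));
    $rank = max(1, min($rank, count($values))); // keep the rank inside the array
    return (float)$values[$rank - 1];
}

// Hypothetical number of distinct values per parameter.
$distinctCounts = ['id' => 480, 'page' => 35, 'sort' => 3, 'lang' => 2];

// 50th percentile: sorted counts are [2, 3, 35, 480], rank 2, threshold 3.
$threshold = nearestRankPercentile(50, $distinctCounts);
$dynamic = array_keys(array_filter(
    $distinctCounts,
    fn ($count) => $count > $threshold
));
print_r($dynamic); // id and page are above the threshold

// 90th percentile: rank 4, threshold 480, so no parameter is above it.
$strict = nearestRankPercentile(90, $distinctCounts);
```

With only four parameters, the 90th percentile equals the largest count, so nothing gets replaced. A high percentile only helps once there are many different parameters to rank.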

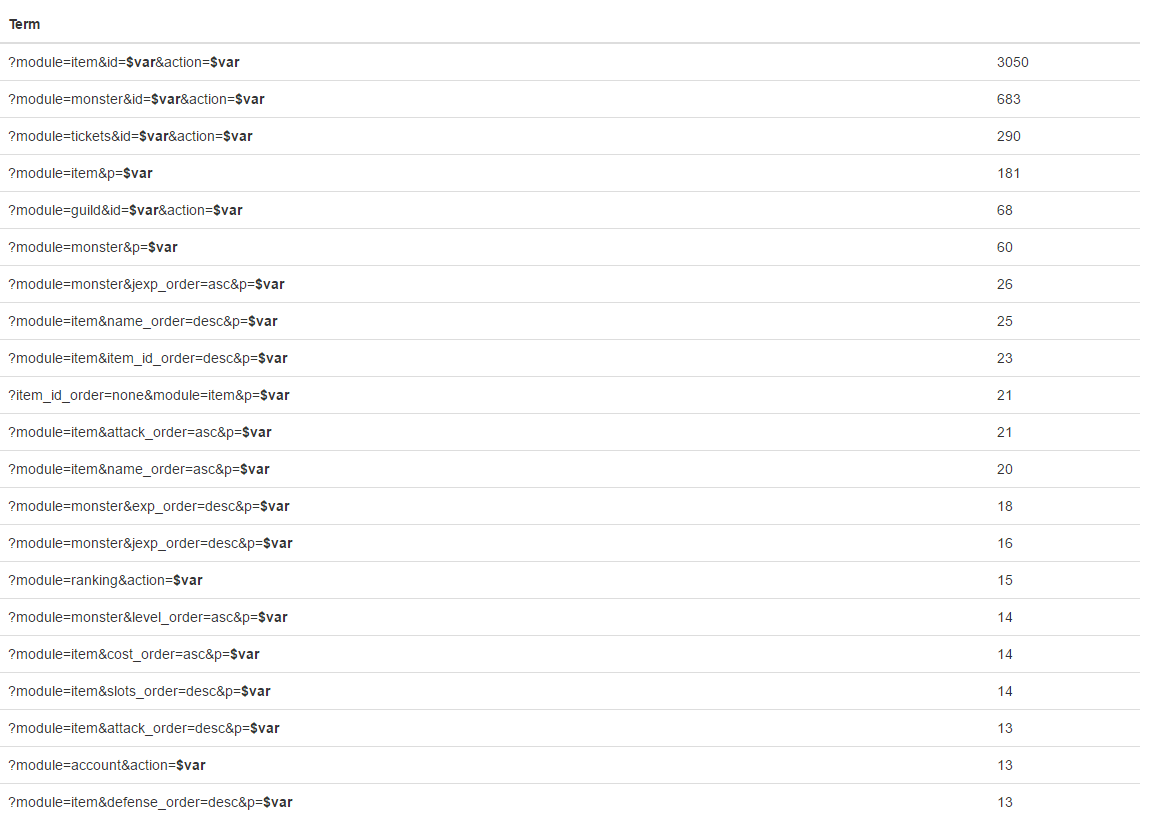

For example, I use the 90th percentile for 5000 links:

```php
<?php

$stats = [];

// Array of objects whose `page` property holds the URL.
$pages = [
    (object)['page' => 'http://example.com/?page=123'],
    (object)['page' => 'http://example.com/?page=123'],
    (object)['page' => 'http://example.com/?page=123'],
    (object)['page' => 'http://example.com/?page=321'],
    (object)['page' => 'http://example.com/?page=321'],
    (object)['page' => 'http://example.com/?page=321'],
    (object)['page' => 'http://example.com/?page=qwas'],
    (object)['page' => 'http://example.com/?page=safa15'],
];

// Count how often each value occurs for every query parameter.
$params_counter = [];
foreach ($pages as $page) {
    $components = explode('?', $page->page);
    if (!empty($components[1])) {
        parse_str($components[1], $params);
        foreach ($params as $key => $val) {
            if (!isset($params_counter[$key][$val])) {
                $params_counter[$key][$val] = 0;
            }
            $params_counter[$key][$val]++;
        }
    }
}

function procentile($percentile, $array)
{
    sort($array); // sorts ascending and reindexes from 0
    $count = count($array);
    $index = ($percentile / 100) * $count;
    if (floor($index) == $index) {
        // Whole-number index: average the two neighbouring values,
        // clamped so the 0th and 100th percentile stay inside the array.
        $lower = max((int)$index - 1, 0);
        $upper = min((int)$index, $count - 1);
        $result = ($array[$lower] + $array[$upper]) / 2;
    } else {
        $result = $array[(int)floor($index)];
    }
    return $result;
}

// Number of distinct values seen for each parameter.
$some_data = [];
foreach ($params_counter as $key => $val) {
    $some_data[$key] = count($val);
}

$procentile = procentile(90, $some_data);

// Rebuild every query string, replacing parameters that have more
// distinct values than the percentile threshold, and count the results.
foreach ($pages as $page) {
    $components = explode('?', $page->page);
    if (!empty($components[1])) {
        parse_str($components[1], $params);
        arsort($params);
        foreach ($params as $key => $val) {
            if ($some_data[$key] > $procentile) {
                $params[$key] = '$var';
            }
        }
        arsort($params);
        $pattern = http_build_query($params);
        $new_url = urldecode('?' . $pattern);
        if (!isset($stats[$new_url])) {
            $stats[$new_url] = 0;
        }
        $stats[$new_url]++;
    }
}

arsort($stats);
var_dump($stats);
```
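The script above groups on the query string only and uses the literal `$var` as its placeholder. If the grouped pattern should also keep the static part of the URL and use {var} as described in the problem, one possible extension looks like this. It is a sketch under assumptions: `buildTemplate`, the sample URLs, and the list of dynamic parameter names are hypothetical, and the parameters are sorted by name so that differently ordered queries end up in the same group.

```php
<?php

// Hypothetical helper: keep scheme, host and path, and replace the values
// of the given dynamic parameters with the {var} placeholder.
function buildTemplate(string $url, array $dynamicKeys): string
{
    $parts = parse_url($url);
    parse_str($parts['query'] ?? '', $params);
    foreach ($params as $key => $val) {
        // parse_str turns numeric names into integer keys, so compare as strings
        if (in_array((string)$key, $dynamicKeys, true)) {
            $params[$key] = '{var}';
        }
    }
    ksort($params); // same parameter order for every URL
    $base = ($parts['scheme'] ?? 'http') . '://'
        . ($parts['host'] ?? '')
        . ($parts['path'] ?? '');
    if (!$params) {
        return $base;
    }
    // urldecode keeps {var} readable instead of %7Bvar%7D
    return $base . '?' . urldecode(http_build_query($params));
}

$urls = [
    'http://example.com/list?page=1&lang=en',
    'http://example.com/list?lang=en&page=2',
    'http://example.com/list?page=3&lang=de',
];

// Count how many URLs collapse into each template.
$templates = [];
foreach ($urls as $url) {
    $template = buildTemplate($url, ['page']);
    $templates[$template] = ($templates[$template] ?? 0) + 1;
}
arsort($templates);
print_r($templates);
```

Here the first two URLs collapse into `http://example.com/list?lang=en&page={var}`, and the third one forms its own group because `lang` is treated as static.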